During the 2025 Spring Festival, one thing was even "hotter" than the fireworks and firecrackers: DeepSeek's R1 large model.

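I spent the past few days on a trip to Huzhou. Today, the first thing I did when I sat down at the computer was try it out for myself. I chatted with it on the web page for a while, but that was not enough, so I decided to install it and run it locally.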

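I opened my 幽兰代码本 (the Youlan code notebook) and installed Ollama first. Ollama is an open-source inference tool for large models, and it has very good model-management features.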

I started with the install script from the Ollama website, but it failed while downloading the package:

geduer@ulan:~$ curl -fsSL https://ollama.com/install.sh | sh

>>> Installing ollama to /usr/local

[sudo] password for geduer:

>>> Downloading Linux arm64 bundle

curl: (28) Failed to connect to github.com port 443 after 129139 ms: Couldn't connect to server

gzip: stdin: unexpected end of file

tar: Child returned status 1

tar: Error is not recoverable: exiting now

It is worth noting that the download link in the script points to ollama.com:

"https://ollama.com/download/ollama-linux-${ARCH}.tgz${VER_PARAM}"

In practice, though, the request is redirected to GitHub, which is why the error above says it cannot connect to github.com. After several failed attempts I switched to another approach: download the file by hand, then copy it to the 幽兰.

First, run wget https://ollama.com/install.sh to save the install script locally, then edit it slightly:

status "Installing ollama to $OLLAMA_INSTALL_DIR"

$SUDO install -o0 -g0 -m755 -d $BINDIR

$SUDO install -o0 -g0 -m755 -d "$OLLAMA_INSTALL_DIR"

if test -e ollama-linux-${ARCH}.tgz; then

status "Expanding local file now"

$SUDO tar -xzf ollama-linux-${ARCH}.tgz -C "$OLLAMA_INSTALL_DIR"

else

status "Downloading Linux ${ARCH} bundle"

curl --fail --show-error --location --progress-bar \

"https://ollama.com/download/ollama-linux-${ARCH}.tgz${VER_PARAM}" | \

$SUDO tar -xzf - -C "$OLLAMA_INSTALL_DIR"

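fi

The lines that test for a local file are the ones I added: if ollama-linux-${ARCH}.tgz already exists in the current directory, the script unpacks it instead of downloading.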
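The manual route described above (fetch the bundle on a machine that can reach GitHub, copy it over, and let the edited script pick up the local file) could be sketched roughly as follows. The helper names `ollama_arch`, `ollama_bundle_url` and `check_bundle` are made up for this illustration. The URL pattern is the one from the script excerpt, without the optional version parameter. The integrity check is an extra precaution suggested by the "unexpected end of file" error, not something the original script does.

```shell
#!/bin/sh
# Hypothetical helpers for the manual-download route; not part of install.sh.

# Map a `uname -m` value to the architecture suffix used in the bundle name.
ollama_arch() {
    case "$1" in
        x86_64)        echo "amd64" ;;
        aarch64|arm64) echo "arm64" ;;
        *)             echo "unsupported architecture: $1" >&2; return 1 ;;
    esac
}

# Build the bundle URL for a machine type, following the pattern in the script.
ollama_bundle_url() {
    arch=$(ollama_arch "$1") || return 1
    echo "https://ollama.com/download/ollama-linux-${arch}.tgz"
}

# Return 0 only if the file is a complete, readable gzip-compressed tar archive.
# A truncated download fails here instead of halfway through the install.
check_bundle() {
    bundle=$1
    [ -s "$bundle" ] || { echo "missing or empty: $bundle" >&2; return 1; }
    gzip -t "$bundle" 2>/dev/null || { echo "corrupt gzip stream: $bundle" >&2; return 1; }
    tar -tzf "$bundle" >/dev/null 2>&1 || { echo "unreadable tar archive: $bundle" >&2; return 1; }
}
```

On the machine with GitHub access, something like `wget "$(ollama_bundle_url aarch64)"` would fetch ollama-linux-arm64.tgz. Once the file has been copied next to install.sh on the 幽兰 (for example with scp), `check_bundle ollama-linux-arm64.tgz && sh install.sh` runs the edited script only if the archive is intact.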

With this change in place, I ran the script again and the installation went through smoothly.

>>> Install complete. Run "ollama" from the command line.

From here on, Ollama can be used through the ollama command.

geduer@ulan:~$ ollama

Usage:

ollama [flags]

ollama [command]

Available Commands:

serve Start ollama

create Create a model from a Modelfile

show Show information for a model

run Run a model

stop Stop a running model

pull Pull a model from a registry

push Push a model to a registry

list List models

ps List running models

cp Copy a model

rm Remove a model

help Help about any command

Flags:

-h, --help help for ollama

-v, --version Show version information

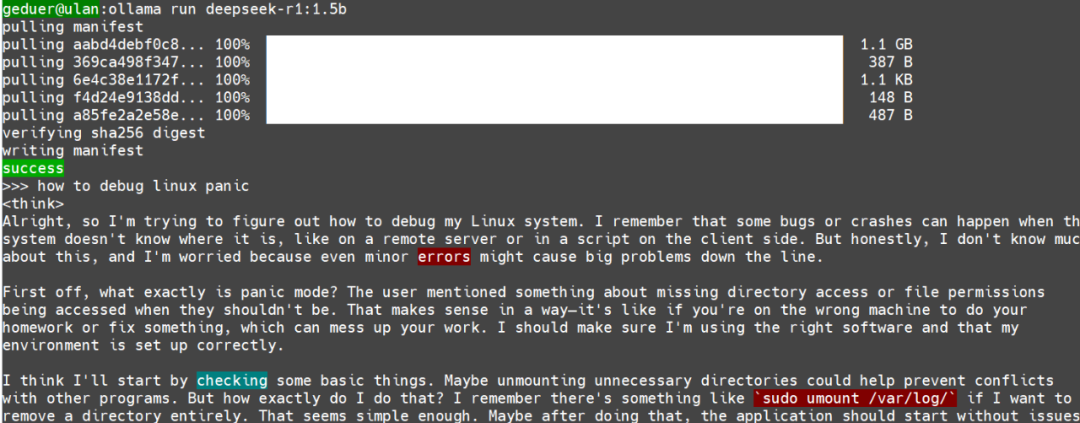

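Use "ollama [command] --help" for more information about a command.

You can download a model with the pull command, or download and run it in one step with the run command. I used run directly and, worried that the download might be slow, started with the small 1.5b model.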

ollama run deepseek-r1:1.5b

To my surprise the download was fairly quick: it finished in a little over ten minutes, and the model started up successfully.
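Since run downloads a model that is missing, the two steps can also be separated. This matters on a slow link, where it helps to see whether a download is about to start. Below is a minimal sketch, assuming that `ollama list` prints a header line followed by one model per line with the name in the first column. The helper names `has_model` and `run_model` are invented for this example.

```shell
#!/bin/sh
# Hypothetical helpers; the column layout of `ollama list` is an assumption.

# Succeeds if model name $1 appears in `ollama list`-style text read from stdin.
# Skips the header line and compares the first column literally.
has_model() {
    awk 'NR > 1 { print $1 }' | grep -qxF "$1"
}

# Pull the model only when it is not already present locally, then run it.
run_model() {
    model=$1
    if ! ollama list | has_model "$model"; then
        echo "model $model not found locally, pulling first" >&2
        ollama pull "$model" || return 1
    fi
    ollama run "$model"
}
```

Calling `run_model deepseek-r1:1.5b` then behaves like the plain run command, but prints a notice before any download begins.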

geduer@ulan:~$ ollama run deepseek-r1:1.5b

pulling manifest

pulling aabd4debf0c8... 100% ▕█████████████████████████████████████████████████████████████████▏ 1.1 GB

pulling 369ca498f347... 100% ▕█████████████████████████████████████████████████████████████████▏ 387 B

pulling 6e4c38e1172f... 100% ▕█████████████████████████████████████████████████████████████████▏ 1.1 KB

pulling f4d24e9138dd... 100% ▕█████████████████████████████████████████████████████████████████▏ 148 B

pulling a85fe2a2e58e... 100% ▕█████████████████████████████████████████████████████████████████▏ 487 B

verifying sha256 digest

writing manifest

success

>>>

I asked a random question: how to debug linux panic

After thinking for about a second it began to answer, and at a decent speed:

>>> how to debug linux panic

<think>

Alright, so I'm trying to figure out how to debug my Linux system. I remember that some bugs or crashes can happen when the system doesn't know where it is, like on a remote server or in a script on the client side. But honestly, I don't know much about this, and I'm worried because even minor errors might cause big problems down the line.

First off, what exactly is panic mode? The user mentioned something about missing directory access or file permissions being accessed when they shouldn't be. That makes sense in a way - it's like if you're on the wrong machine to do your homework or fix something, which can mess up your work. I should make sure I'm using the right software and that my environment is set up correctly.

I think I'll start by checking some basic things. Maybe unmounting unnecessary directories could help prevent conflicts with other programs. But how exactly do I do that? I remember there's something like `sudo umount /var/log/` if I want to remove a directory entirely. That seems simple enough. Maybe after doing that, the application should start without issues.

Then, I've heard about using tools like `-l` and `-nul` for logging file changes. So maybe running something like `ls -l--lrat` could show me which files are changing in real-time. But wait, I'm not sure how to use that correctly. Should it be on the root directory or specific directories? I think it should start from the root because otherwise, if I specify a different place, the logs won't match.

I ran this experiment on the 幽兰代码本. Ollama does not support the Mali GPU, so inference ran entirely on the CPU and kept all 8 CPU cores essentially saturated. Attaching gdb to the key compute process shows several threads doing large matrix multiplications in the ggml library.
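The gdb observation can be reproduced along the following lines. This is only a sketch: the helper names `thread_count` and `dump_backtraces` are invented here. Finding the right PID is deliberately left out, because the name of the compute process differs between Ollama versions.

```shell
#!/bin/sh
# Hypothetical helpers for inspecting the inference process (Linux only).

# Count the threads of a process by listing /proc/<pid>/task.
# Prints 0 when the process does not exist.
thread_count() {
    ls "/proc/$1/task" 2>/dev/null | wc -l | tr -d ' '
}

# Attach gdb, print a backtrace of every thread, then detach.
# Requires gdb and permission to ptrace the target (often root).
dump_backtraces() {
    gdb -p "$1" -batch -ex "thread apply all bt"
}
```

`pgrep -af ollama` lists candidate PIDs. Passing the compute process's PID to `thread_count` shows how many threads it has, and frames from the ggml library in the `dump_backtraces` output would match the matrix-multiplication threads described above.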

A few distinct traits are easy to notice in DeepSeek's answers:

- It speaks in the first person, for example "I think" and "I've heard about".

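- It shows its thinking process. The output opens with a `<think>` block, and once the answer starts it still lays out its reasoning, for example: "First, I need to understand why this is happening. Perhaps the problem lies in the router's configuration, or in how the network connection and packet translation are handled. Let me analyze the possible causes step by step."

- It is context-aware: it automatically treats earlier questions as scene information, for example "Alright, so I'm trying to figure out what "ollama" means in this context."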
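Because the reasoning is wrapped in a `<think>` block, it can be separated from the final answer with a simple filter. The sketch below assumes the output contains literal `<think>` and `</think>` tags, each on its own line. The opening tag appears in the transcript above, while the closing tag and the one-tag-per-line layout are assumptions. The helper names `strip_think` and `only_think` are invented for this example.

```shell
#!/bin/sh
# Hypothetical filters; assume <think> and </think> each sit on their own line.

# Drop the reasoning block: delete from the <think> line through the </think> line.
strip_think() {
    sed '/<think>/,/<\/think>/d'
}

# Keep only the reasoning block, including the two tag lines.
only_think() {
    sed -n '/<think>/,/<\/think>/p'
}
```

For a one-shot prompt this might be used as `ollama run deepseek-r1:1.5b "how to debug linux panic" | strip_think`, which would print only the final answer if the output is laid out as assumed.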

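These three traits alone make the DeepSeek model feel noticeably more "human", as if it had learned how people think. It lives up to its reputation! Large models are advancing at a remarkable pace, constantly bringing out something new and "exploring uncharted territory"...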

This article is reposted from a WeChat official account: https://mp.weixin.qq.com/s/hcGUdaNE49G71nOd_J1Gbw

Last edited by: zuoyingying  Updated: 2025-12-23 14:22